For the last 5 years I've been working on all kinds of new developments in networking for telecom operators. Examples are the rise of the 'white boxes' (relatively cheap networking and server hardware that can be used with your own software) like the ones championed by the Open Compute Project (OCP), open source network control software as created by the Open Networking Foundation (ONF), and a new network data plane programming language called P4 (created by Barefoot Networks Inc. and transferred to P4.org, now under the umbrella of the ONF). Going through this period I only missed one thing: a performant P4 based virtual switch!

Why is there a need for an open source P4 based vSwitch?

The P4 language and the current high performance hardware switch chips supporting P4, built by Barefoot, Cisco and others, allow many people to experiment with and implement their own protocols again, without depending on big switch vendors (and the corresponding high cost) for small scale private implementations of networking and service protocols. As Nick McKeown, Professor at Stanford University, co-founder of Nicira (OpenFlow!) and Barefoot Networks (P4!) and the father of Software Defined Networking (SDN), stated during his ONF Connect 2019 keynote: "Network owners took control of their software back and now take control of packet processing too!"

While on the software side there is BMv2 (Behavioural Model version 2), created by P4.org for testing and verifying P4 language constructs and the P4C compiler implementation, at this moment there is no real high performance implementation of a P4 capable virtual switch (vSwitch). A relatively high performance fixed function vSwitch is Open vSwitch, the open source virtual switch developed during the rise of the first Software Defined Networking 'language', called OpenFlow. OpenFlow was a great start when coming from a 'black box' environment, but more and more flexibility is required in data plane packet handling and control. Open vSwitch is popular because it has done an incredible job of implementing all the fixed function requirements that people were looking for in a vSwitch, controllable through a nice CLI. Up until now! ;-)

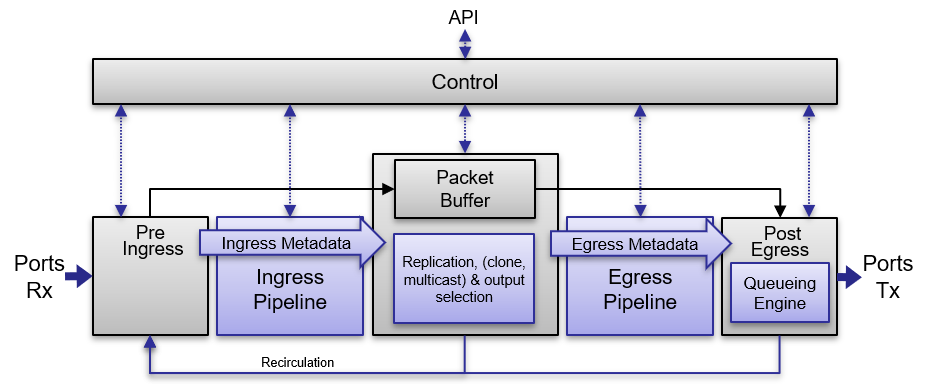

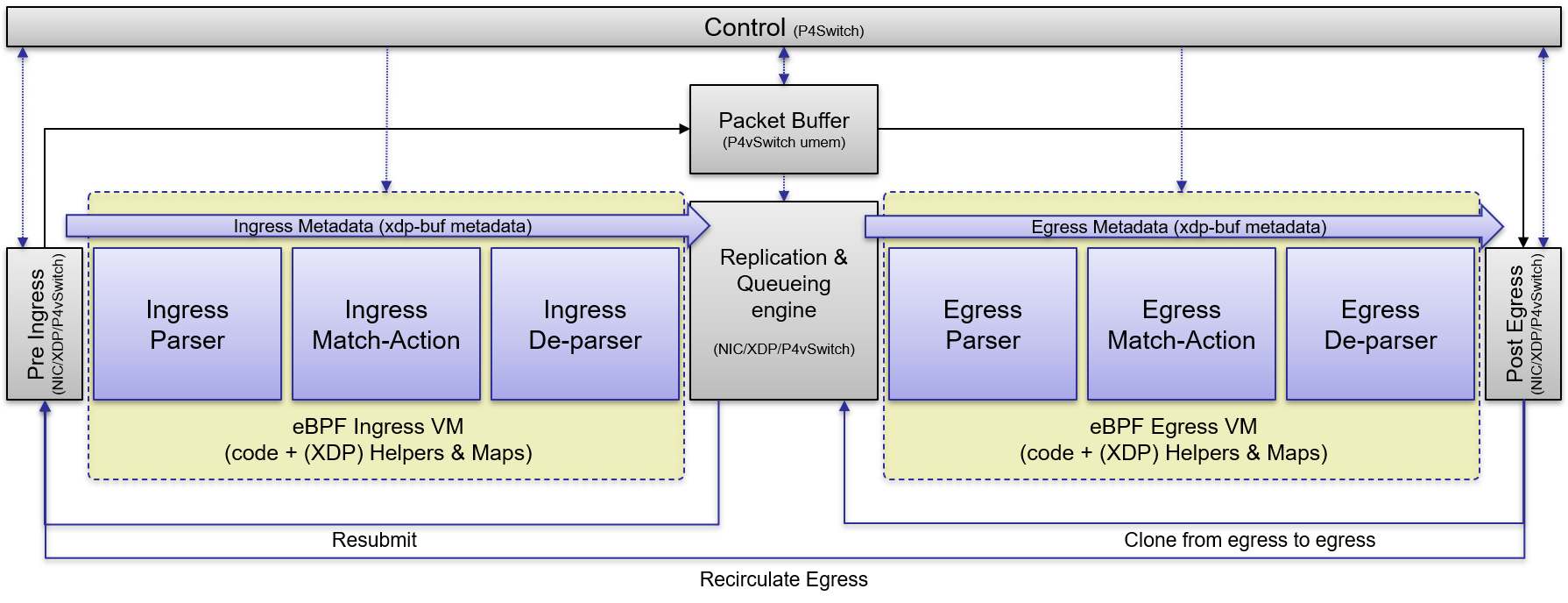

If you look into how switch chips (and also software based switches) work inside, you will find the common setup depicted in the picture below:

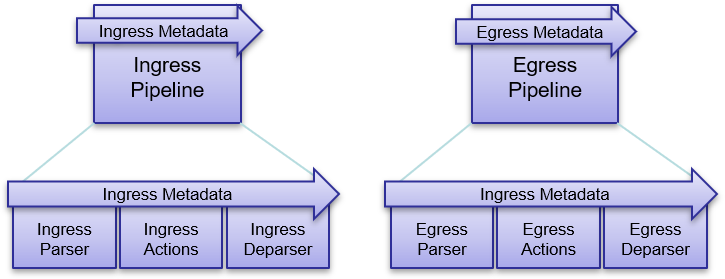

Network packets come in through ports, are pre-processed and put into a buffer. The first x bytes of a packet then go through an Ingress pipeline, where all the header parsing and rewriting is done depending on rules and tables set up by the Control layer. After this first pipeline, replication and output port selection are done based on the metadata that was set, and the packet is put into the Egress pipeline for output parsing and rewriting. In the last stage the packet is built up again by combining the new header data with the rest of the packet stored in the buffer, and it is sent out of the port.
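The stages described above can be sketched in a few lines of Python. This is only a toy model under my own assumptions: the 64 byte header window, the dictionary based forwarding table and all function names are hypothetical and not taken from any real switch or P4 target.

```python
from dataclasses import dataclass, field

HEADER_BYTES = 64  # assumed size of the "first x bytes" sent through the pipelines


@dataclass
class Packet:
    header: bytearray   # the part the pipelines may parse and rewrite
    payload: bytes      # the rest, parked in the packet buffer
    metadata: dict = field(default_factory=dict)


def receive(raw: bytes, in_port: int) -> Packet:
    """Pre-processing: split the packet into header and buffered payload."""
    return Packet(bytearray(raw[:HEADER_BYTES]), raw[HEADER_BYTES:],
                  {"in_port": in_port})


def ingress(pkt: Packet, forwarding_table: dict) -> None:
    """Ingress pipeline: parse the header, set metadata from control-plane tables."""
    dst_mac = bytes(pkt.header[0:6])
    # Unknown destinations are marked for flooding.
    pkt.metadata["out_ports"] = forwarding_table.get(dst_mac, "flood")


def replicate(pkt: Packet, all_ports: list) -> list:
    """Replication and output port selection, driven by the ingress metadata."""
    out = pkt.metadata["out_ports"]
    if out == "flood":
        out = [p for p in all_ports if p != pkt.metadata["in_port"]]
    return [(port, Packet(bytearray(pkt.header), pkt.payload, dict(pkt.metadata)))
            for port in out]


def egress(pkt: Packet, port: int, port_macs: dict) -> bytes:
    """Egress pipeline plus final stage: rewrite the header, rebuild the packet."""
    pkt.header[6:12] = port_macs[port]  # example rewrite: source MAC of the out port
    return bytes(pkt.header) + pkt.payload


def switch(raw: bytes, in_port: int, forwarding_table: dict, port_macs: dict) -> dict:
    """Run one packet through all stages; returns {out_port: bytes to send}."""
    pkt = receive(raw, in_port)
    ingress(pkt, forwarding_table)
    return {port: egress(copy, port, port_macs)
            for port, copy in replicate(pkt, sorted(port_macs))}
```

In a P4 architecture the bodies of `ingress` and `egress` are the parts you get to write yourself; the buffering and replication around them stay fixed.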

Those Ingress and Egress pipelines are where the important packet handling happens, and they are the part that is programmable in a P4 architecture. In Open vSwitch they are fixed and cannot be changed the way we would like. Another important thing the P4.org group addressed is the control API. They created an open, pipeline independent API protocol called P4Runtime, based on gRPC and combined with a flexible P4 compiler artefact called p4info. P4Runtime provides a flexible, open, program independent API, in contrast to the secret, proprietary, fixed function APIs (or SDKs) of lots of other switches.
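To give a feeling for what "program independent" means here, the following is a minimal sketch of how a controller could turn names from a p4info-like description into the numeric IDs that a table write carries. The dictionary layout, the table and action names, the IDs and the byte encoding are all simplified assumptions of mine; the real p4info and P4Runtime messages are protobufs with more fields.

```python
# Hypothetical, heavily simplified stand-in for the p4info artefact that the
# P4 compiler emits next to the data plane program.
P4INFO = {
    "tables": {
        "ingress.l2_fwd": {
            "id": 33554433,
            "match_fields": {"hdr.ethernet.dst_addr": {"id": 1, "bitwidth": 48}},
        },
    },
    "actions": {
        "ingress.forward": {
            "id": 16777217,
            "params": {"port": {"id": 1, "bitwidth": 9}},
        },
    },
}


def encode(value: int, bitwidth: int) -> bytes:
    """Encode an integer as big-endian bytes, padded to the field width."""
    if value < 0 or value >> bitwidth:
        raise ValueError(f"{value} does not fit in {bitwidth} bits")
    return value.to_bytes((bitwidth + 7) // 8, "big")


def build_table_entry(p4info: dict, table: str, match: dict,
                      action: str, params: dict) -> dict:
    """Resolve names to IDs; the controller never hard-codes pipeline details."""
    t = p4info["tables"][table]
    a = p4info["actions"][action]
    return {
        "table_id": t["id"],
        "match": [
            {"field_id": t["match_fields"][name]["id"],
             "exact": encode(value, t["match_fields"][name]["bitwidth"])}
            for name, value in match.items()
        ],
        "action": {
            "action_id": a["id"],
            "params": [
                {"param_id": a["params"][name]["id"],
                 "value": encode(value, a["params"][name]["bitwidth"])}
                for name, value in params.items()
            ],
        },
    }


entry = build_table_entry(
    P4INFO, "ingress.l2_fwd", {"hdr.ethernet.dst_addr": 0xAABBCCDDEEFF},
    "ingress.forward", {"port": 2})
```

Loading a different P4 program only changes the p4info description; `build_table_entry` itself stays the same, which is the point of a pipeline independent API.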

What are my requirements for a next generation (Linux) virtual network switch?

- (P4) programmable data plane

- Modular and Open Source (lib style module)

- Generic open SDK/API interface between data plane implementation library and functional layer such that multiple implementations could be built on the same vSwitch library

- Fast switching between physical and virtual ports

- Not kernel bypass like DPDK!! (as such based on Express Data Path, XDP, and eBPF VMs)

- Don't steal the entire NIC!

- Long term architecture capable of offloading packet handling program to NIC hardware without large data plane program rewrites

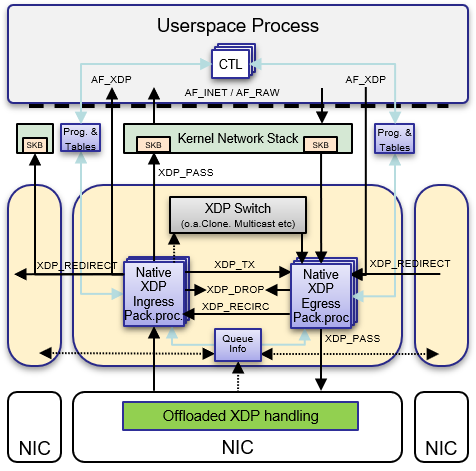

In my opinion XDP and AF_XDP are important 'technologies' for a future vSwitch implementation. So first a description of what those are!

What is XDP and how can it be used for a vSwitch implementation?

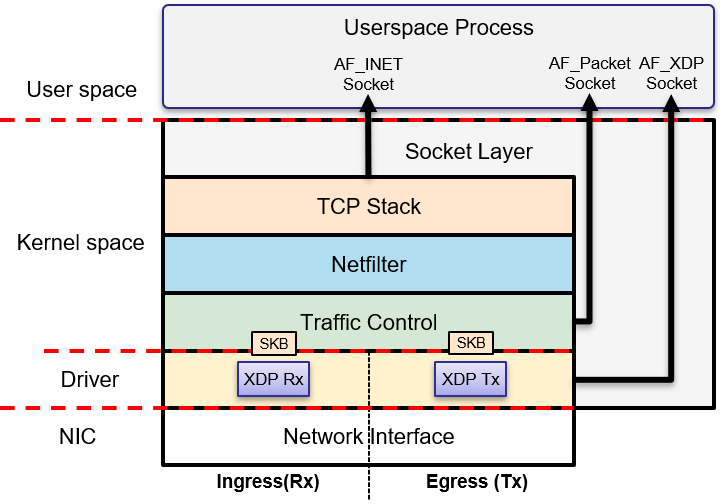

XDP is a relatively new, programmable layer in the kernel network stack at driver level. It allows for run-time programmable packet processing inside the kernel, not by kernel-bypass. The XDP programs are compiled to platform-independent eBPF bytecode and those object files can be loaded on multiple kernels and architectures without recompiling!

The most important XDP implementation goal is to close the performance gap to kernel-bypass solutions (it is not a goal to be faster than kernel-bypass) by operating directly on packet buffers (like DPDK) and by decreasing the number of instructions executed per packet.

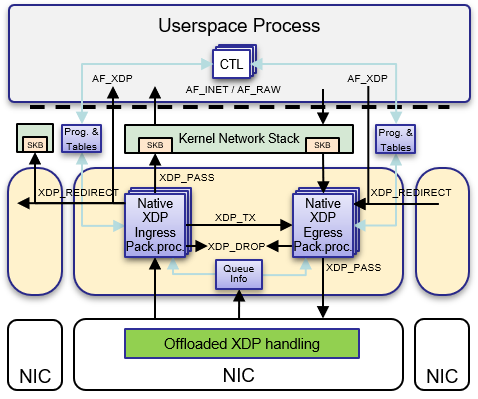

This is done by allowing operations on packets before they are converted to kernel socket buffers (SKBs) and by implementing a direct user space socket delivery mechanism called AF_XDP. This allows XDP to work in concert with the existing network stack without kernel modifications:

- It provides an in-kernel alternative that is more flexible

- It doesn’t steal the entire NIC!

XDP has two main components: a data plane and a control plane. As described, the data plane is implemented in the kernel as the XDP core and is called from the Network Device drivers. It has a programmable core (based on an eBPF VM), extended with fixed helper functions and maps, whose 'exit' result code determines where the packet goes next:

- send to the normal 'core local' kernel stack (XDP_PASS)

- send to the Transmit side of the current NetDev (XDP_TX)

- redirect to 'remote core' kernel stack (XDP_REDIRECT with a CPUMAP)

- redirect to AF_XDP (XDP_REDIRECT with an XSKMAP)

- redirect to another NetDev (XDP_REDIRECT with a DEVMAP)

- or just drop the packet (XDP_DROP)
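As an illustration of these result codes, here is a small user space model in Python. The numeric values match the `xdp_action` enum in the Linux headers (which also defines XDP_ABORTED for program errors), but everything else is a hypothetical sketch: a real XDP program is written in restricted C, returns only the code, and selects the redirect target through a helper and a map rather than a tuple.

```python
from enum import IntEnum


class XdpAction(IntEnum):
    XDP_ABORTED = 0   # program error; the packet is dropped
    XDP_DROP = 1
    XDP_PASS = 2
    XDP_TX = 3
    XDP_REDIRECT = 4


def toy_xdp_prog(frame: bytes, rx_queue: int, fdb: dict,
                 xsk_queues: dict, blocked: set):
    """Return (verdict, redirect target) for one received Ethernet frame.

    fdb        : hypothetical forwarding table, destination MAC -> ifindex
    xsk_queues : hypothetical table, RX queue -> AF_XDP socket id
    blocked    : set of source MACs to drop
    """
    if len(frame) < 14:                      # shorter than an Ethernet header
        return XdpAction.XDP_DROP, None
    dst, src = frame[0:6], frame[6:12]
    if src in blocked:
        return XdpAction.XDP_DROP, None
    if dst in fdb:                           # known destination: other NetDev
        return XdpAction.XDP_REDIRECT, ("netdev", fdb[dst])
    if rx_queue in xsk_queues:               # queue bound to a user space socket
        return XdpAction.XDP_REDIRECT, ("af_xdp", xsk_queues[rx_queue])
    return XdpAction.XDP_PASS, None          # everything else: normal kernel stack
```

The order of the checks is a design choice of this sketch; a vSwitch data plane would typically try the fast in-kernel redirect first and fall back to user space or the kernel stack only when needed.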

The control plane is located in user space. It controls the data plane by loading the eBPF program, attaching its file descriptor to the Network Device (NetDev), and from then on changing the contents of eBPF maps (a sort of tables).
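The load, attach and update-map life cycle can be modelled in a few lines. This is a pure Python toy, assuming a plain dict as a stand-in for an eBPF hash map; `NetDevModel` and `load_program` are hypothetical names and no real BPF system calls are involved.

```python
class NetDevModel:
    """Toy network device with a single XDP attachment point."""

    def __init__(self, name: str):
        self.name = name
        self.prog = None

    def attach(self, prog) -> None:
        self.prog = prog

    def receive(self, frame: bytes) -> str:
        # Without a program every packet simply continues to the kernel stack.
        return self.prog(frame) if self.prog else "XDP_PASS"


def load_program(blocklist: dict):
    """'Load' a program that consults a map shared with the control plane."""
    def prog(frame: bytes) -> str:
        src_mac = frame[6:12]
        return "XDP_DROP" if src_mac in blocklist else "XDP_PASS"
    return prog


blocklist = {}                                # stands in for an eBPF hash map
eth0 = NetDevModel("eth0")
eth0.attach(load_program(blocklist))          # load once, attach once

frame = bytes(6) + b"\x02\x00\x00\x00\x00\x01" + b"\x08\x00" + b"payload"
before = eth0.receive(frame)
blocklist[b"\x02\x00\x00\x00\x00\x01"] = 1    # control plane only touches the map
after = eth0.receive(frame)
print(before, after)  # prints "XDP_PASS XDP_DROP"
```

The program itself is never reloaded; the behaviour changes purely because the shared map changed, which is how a control plane steers a running XDP data plane.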

eBPF bytecode can be generated with LLVM. Loading it into the kernel and creating maps all goes through the bpf() system call, for which two different methods are available: bcc-tools or libbpf.

At this moment only Ingress XDP (available since Linux kernel 4.8) and AF_XDP (available since kernel 4.18) are implemented, but work is underway on Egress XDP, sharing of input and output queue information, and multiple eBPF programs on one Network Device. Those are all development steps needed to implement a kernel level vSwitch. More information can be found on the XDP project website.

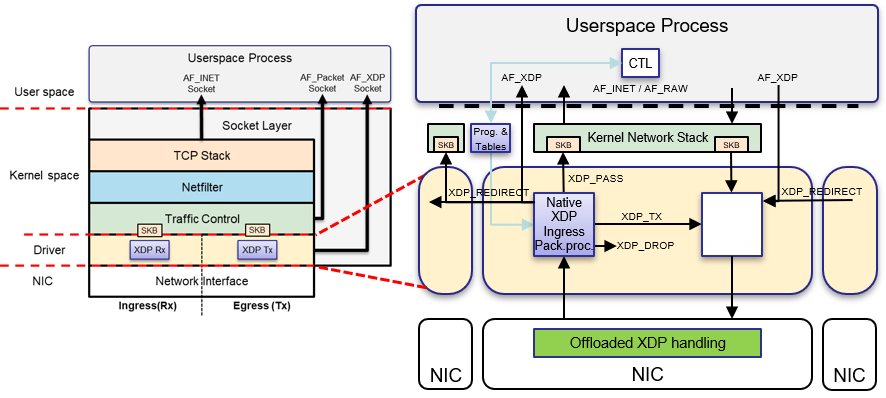

There are three implementation types (hooks) of XDP Core: Generic Mode XDP, Native Mode XDP and XDP Offload to NIC.

The first mode is Generic Mode XDP. This is an XDP hook called from netif_receive_skb(), which means that it processes packets only after the packet DMA transfer and the SKB allocation have completed. More instructions are therefore executed before the XDP program runs, but the mode is independent of the Network Device driver.

Native Mode XDP is a driver hook available just after the DMA transfer of packets from the NIC to the NIC driver. It processes packets before SKB allocation, so no waiting for memory allocation! In this way the smallest number of instructions is executed before running the XDP program in eBPF. Not all drivers have implemented Native Mode XDP, but at the time of writing this blog most commonly used NIC drivers and some others (like veth and virtio) already support it.

The third mode is XDP Offload to NIC. This mode is still under development and at this moment only (partially) implemented in a select number of NICs, but it will give the highest performance because the XDP program is executed in the NIC itself, on compute chips separate from the ones running the host OS.

It is totally clear that only the latter two modes should be used in my proposed vSwitch design.
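That preference can be written down as a small selection function. The flag values below are the `XDP_FLAGS_*` constants from the Linux `if_link.h` header; the function itself and its capability arguments are a hypothetical sketch, since how you discover what a driver or NIC supports depends on your tooling.

```python
XDP_FLAGS_SKB_MODE = 1 << 1   # Generic Mode XDP
XDP_FLAGS_DRV_MODE = 1 << 2   # Native Mode XDP
XDP_FLAGS_HW_MODE = 1 << 3    # XDP Offload to NIC


def choose_xdp_mode(native_supported: bool, offload_supported: bool,
                    allow_generic: bool = False) -> int:
    """Pick the attach flag for a NetDev, preferring the fastest hook."""
    if offload_supported:
        return XDP_FLAGS_HW_MODE
    if native_supported:
        return XDP_FLAGS_DRV_MODE
    if allow_generic:
        # Only useful for functional testing; too slow for the vSwitch itself.
        return XDP_FLAGS_SKB_MODE
    raise RuntimeError("driver offers neither Native nor Offload XDP")
```

Raising an error instead of silently falling back to Generic Mode is deliberate in this sketch: a vSwitch that quietly runs in the slowest mode is hard to debug.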

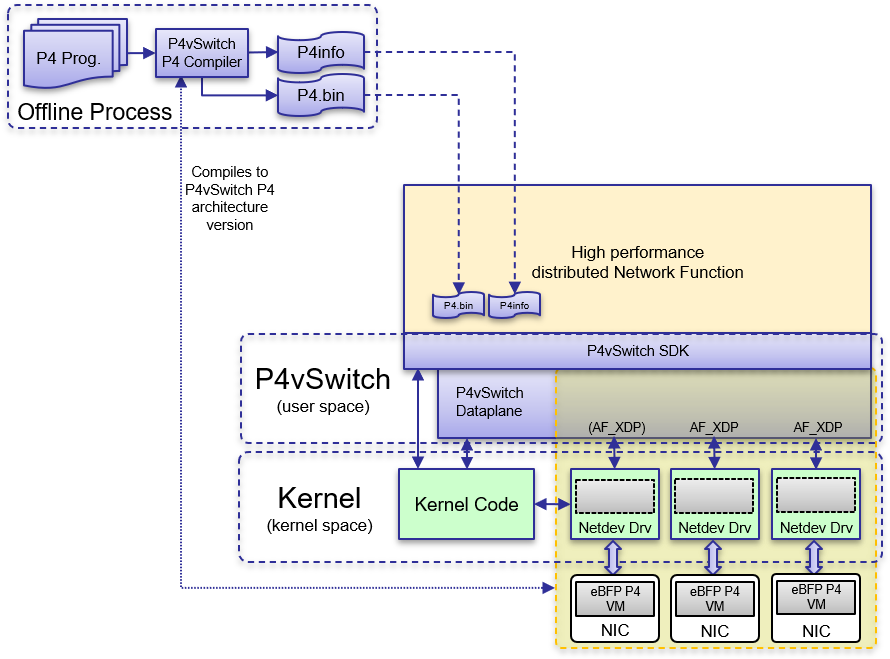

Possible design of a P4 vSwitch

Combining the possibilities of XDP and AF_XDP and the programmable switch pipeline from earlier we could perfectly well implement the pipeline in eBPF and with XDP helpers and maps.

That is not a totally new idea, as it is already implemented in user space by Tomasz Osiński and colleagues of Orange Labs Poland in a patched version of Open vSwitch.

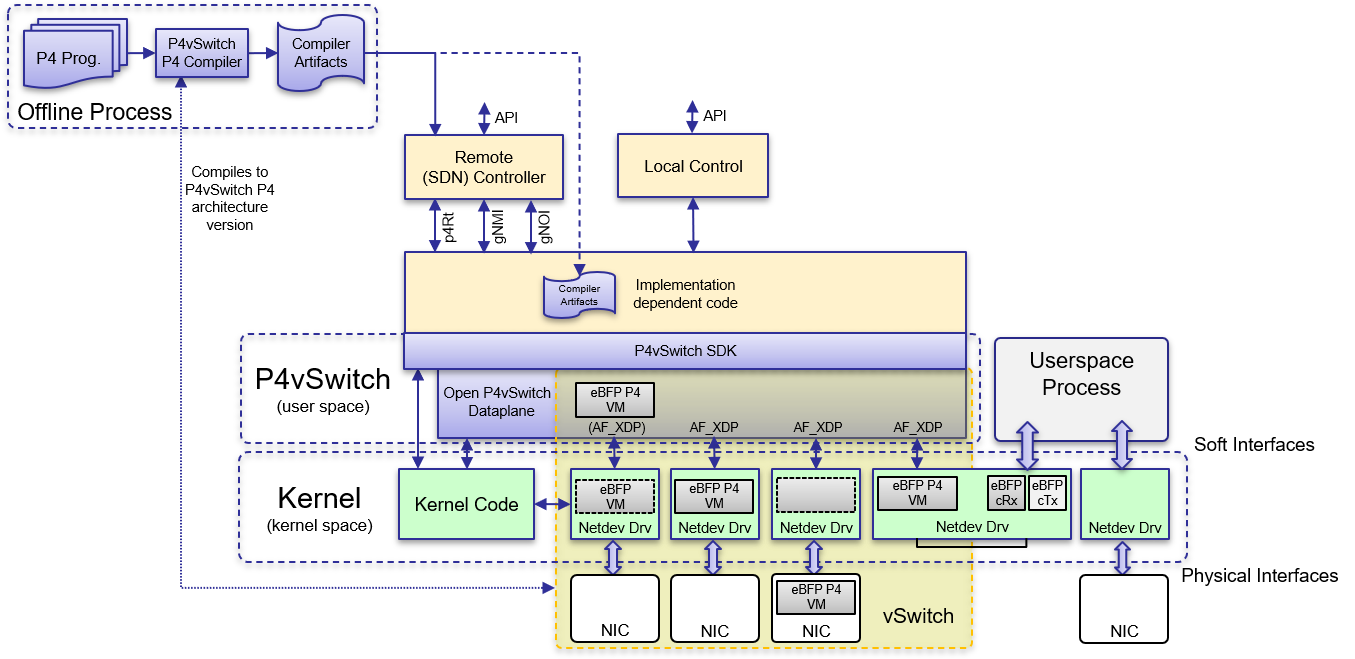

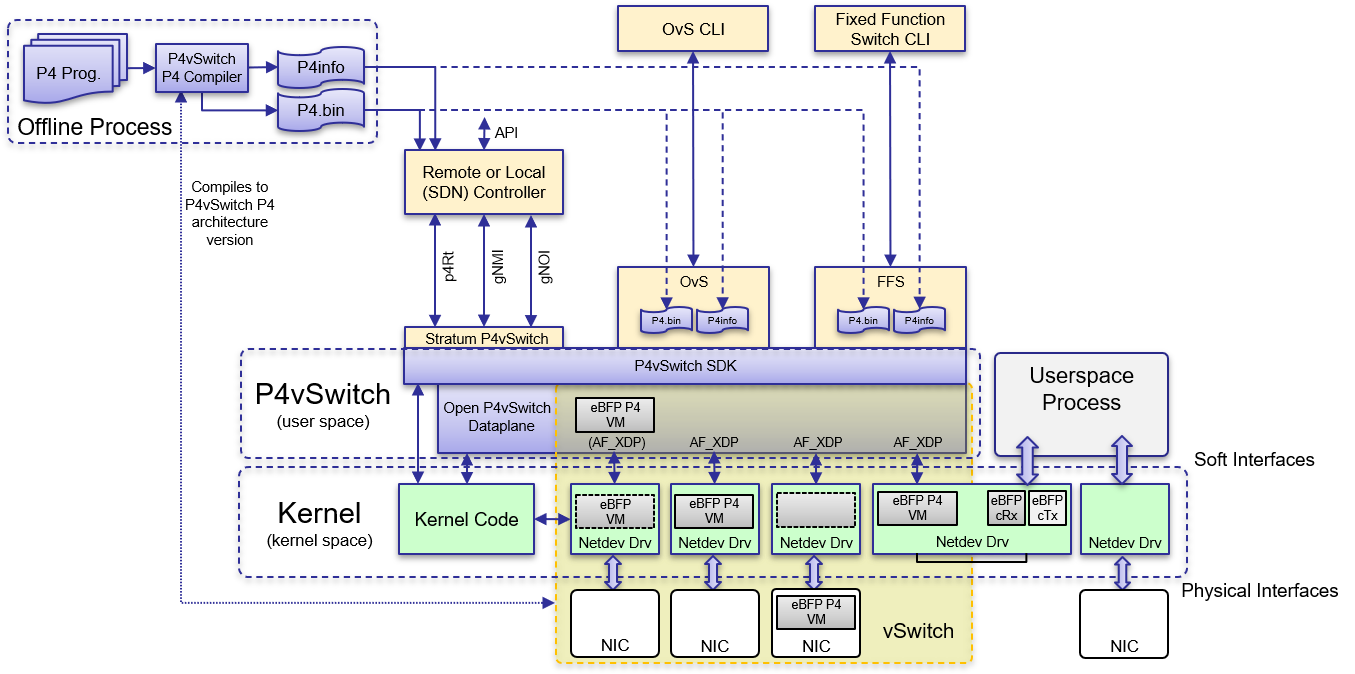

What is different from that implementation is the possibility to implement the pipeline per NetDev queue/stream in user space, in the kernel NetDev driver or even in the NIC hardware. This three layer processing could be implemented step-by-step over time without the implementation dependent code knowing what is happening within the p4vSwitch library!
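A rough sketch of how such a library could hide the three layers from its users is shown below. Everything in it is hypothetical: the `Layer` names, the `Queue` description and the idea of choosing a layer by comparing required externs against what each layer offers are my own assumptions for illustration, not an existing API.

```python
from dataclasses import dataclass
from enum import Enum


class Layer(Enum):
    NIC_HARDWARE = 1
    KERNEL_DRIVER = 2
    USER_SPACE = 3


# Preference order: as close to the wire as possible.
PREFERENCE = [Layer.NIC_HARDWARE, Layer.KERNEL_DRIVER, Layer.USER_SPACE]


@dataclass(frozen=True)
class Queue:
    netdev: str
    index: int
    layers: frozenset   # layers this NetDev queue can run a pipeline in


def place_pipeline(queue: Queue, required_externs: set,
                   externs_by_layer: dict) -> Layer:
    """Pick the lowest layer that can run the pipeline for this queue."""
    for layer in PREFERENCE:
        offered = externs_by_layer.get(layer, set())
        if layer in queue.layers and required_externs <= offered:
            return layer
    raise LookupError(f"no layer can run the pipeline for {queue.netdev}:{queue.index}")
```

Code built on top of the library would only hand over a compiled P4 program and a queue; whether it ends up in the NIC, the driver or user space can then change over time without that code noticing.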

Another important layer in the architecture is the P4 vSwitch compiler, together with the eBPF/uBPF code it generates and the helper functions (externs) available to the P4 program (depicted by the dotted line from the compiler to the yellow vSwitch rectangle). It could be developed completely independently, building on the possibilities the generic p4vSwitch data plane offers.

It also opens up the P4vSwitch ecosystem in a very flexible way as user program, data plane and control implementations are totally decoupled and as such could be developed further totally separated from each other.

For example, a Stratum based P4vSwitch implementation could be built next to an Open vSwitch implementation. But most importantly, specific high performance network function implementations that offload data plane handling to a P4 program running in the underlying NIC hardware could also be built on top of the P4vSwitch library and multi language SDK. All without needing to know the implementation details!

Longer term needed developments on XDP

Although it looks like hallelujah, there are still some missing components to implement a full blown combined user space/kernel/NIC offload based XDP P4vSwitch. Next to the current working items, still missing are extended queueing information, Clone and Multicast possibilities and several recirculation options. Those are already identified on the XDP developers site but are put lower on the implementation wish list.

Another important thing is offloading to NIC hardware. Standardisation and implementation on that front are in their early stages and it's not clear what it will look like, but the intention is that the environment in the NIC will look like XDP running in the driver.

Proof of concept: go-p4vswitch

As I'm more of a 'hands-on' trial and fail fast guy, one of the first things I started writing was a proof-of-concept (POC) implementation to get experience with my ideas using XDP, AF_XDP and a user space data plane.

For that I've written a vSwitch data plane in Go that uses a home-grown AF_XDP implementation and the open source uBPF + P4C compiler C code developed by the wonderful people of Orange Labs Poland.

For this (non-optimized) proof of concept, speed was not first on the requirements list, but the throughput results show a reasonably fast implementation: in the neighbourhood of full 2x 10 Gbit line rate with large packets of 1000-1500 bytes between my two Supermicro SYS-E300-8D test systems (4 core Xeon D-1518). Tests with 64 byte packets on 2x 10 Gbit show a maximum throughput, before packets start being dropped, in the neighbourhood of 4-4.5 Mpps.
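To put those numbers in perspective, the theoretical Ethernet line rate in packets per second can be worked out as below. The sketch assumes that the quoted packet sizes are Ethernet frame sizes including the FCS and that "2x 10 Gbit" means two ports counted together; both are my reading of the figures, not something measured here.

```python
# On the wire every Ethernet frame carries 20 extra bytes:
# 7 bytes preamble + 1 byte start-of-frame delimiter + 12 bytes inter-frame gap.
ETHERNET_OVERHEAD_BYTES = 20


def line_rate_pps(link_bps: float, frame_bytes: int) -> float:
    """Maximum packets per second of one link for a given frame size."""
    return link_bps / ((frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8)


PORTS = 2
LINK_BPS = 10e9

small = PORTS * line_rate_pps(LINK_BPS, 64)     # about 29.76 Mpps for both ports
large = PORTS * line_rate_pps(LINK_BPS, 1500)   # about 1.64 Mpps for both ports

# Fraction of 64 byte line rate reached at 4 and 4.5 Mpps.
low_fraction = 4.0e6 / small
high_fraction = 4.5e6 / small
```

So line rate with large packets needs fewer than 2 Mpps in total, well below the 4-4.5 Mpps ceiling, while that same ceiling is roughly 13 to 15 percent of line rate for 64 byte packets. The bottleneck is the per-packet cost, not the number of bytes moved.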

And to be honest, looking back: that was fairly easy to implement. As I need some more time to clean up the code and do some more thorough tests, the implementation details and test results will be published in a next article in this blog series!

Summary

In this article I explained why I think there is room for a P4vSwitch 'library' implementation. Some more in-depth information about XDP and AF_XDP is given. It then showed my preferred P4vSwitch architecture and gave a peek into my next blog about my POC implementation of P4vSwitch. If you have remarks or want more info, please contact me through Twitter, LinkedIn or email (blog at tolsma.net)

More information:

[1] Fast Packet Processing with eBPF and XDP: Concepts, Code, Challenges, and Applications

[2] XDP - eXpress Data Path: XDP now with REDIRECT; Jesper Dangaard Brouer, Principal Engineer, Red Hat Inc.; LLC - Lund Linux Conf Sweden, Lund, May 2018

[3] XDP (eXpress Data Path) as a building block for other FOSS projects; Magnus Karlsson (Intel), Jesper Dangaard Brouer (Red Hat); FOSDEM 2019, Brussels

[4] Making Networking Queues a First Class Citizen in the Kernel; Magnus Karlsson & Björn Töpel (Intel), Jesper Dangaard Brouer & Toke Høiland-Jørgensen (Red Hat), Jakub Kicinski (Netronome), Maxim Mikityanskiy (Mellanox), Andy Gospodarek (Broadcom); Linux Plumbers Conference Lisbon, Sep 2019